They may sound like Sesame Street characters or Imperial vessels from Star Wars, but LLMs from BERT and Ernie to Falcon 40B, Galactica and GPT-4 are changing the way we interact with technology.

However, the true power of LLMs extends beyond their initial training. Fine-tuning, a critical process for enhancing the model’s performance on specific tasks, is what transforms a general-purpose LLM into a specialized tool.

In this article we’ll explore LLM fine-tuning, with a specific focus on leveraging human guidance in LLM fine-tuning to outmaneuver competitors and create a long-term sustainable advantage.

LLM fine-tuning is the process of refining a pre-trained model for specific tasks or datasets. This contrasts with the initial training phase, where the model learns from a vast, general dataset. During fine-tuning, the model’s parameters are adjusted using a smaller, task-specific dataset, enabling it to perform better in particular scenarios.

For instance, while a model like GPT-3 is trained on diverse internet text to understand and generate human language, fine-tuning it with legal documents would make it adept at interpreting legal language. This specificity is essential in applications like automated contract analysis or legal research assistance.

Methods like RAG (Retrieval-Augmented Generation) can complement fine-tuning and further improve results. RAG combines a retrieval system (like a search engine) with a generative language model, so that responses draw on externally retrieved information. This approach can substantially improve the accuracy and relevance of answers, particularly for queries that require specialized or up-to-date knowledge.
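To make the retrieval half of RAG concrete, here is a minimal sketch. It assumes a tiny in-memory corpus and plain bag-of-words cosine similarity in place of a real search engine or vector index. The helper names (`retrieve`, `build_prompt`) are hypothetical, and the generation step, sending the assembled prompt to an LLM, is deliberately left out.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (hypothetical retriever)."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Prepend retrieved passages as context; an LLM call would consume this (not shown)."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

docs = [
    "A patent grants the holder exclusive rights to an invention for a limited term.",
    "Trademarks protect brand names and logos used in commerce.",
    "Photosynthesis converts light energy into chemical energy in plants.",
]
question = "How long does a patent protect an invention?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

A production system would swap the word-overlap scoring for embeddings and a proper index, but the shape stays the same: retrieve relevant text first, then let the model generate with that text in its context.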

Fine-tuning an LLM also involves balancing the model’s general knowledge with the nuances of the target domain. This customization is critical to achieving the desired accuracy and relevance in the model’s output. A model that has not been customized or fine-tuned will provide adequate information at a general level, since that is what it was trained for; to provide specific, valuable information about a niche topic, however, further fine-tuning or customization is needed.

For example, in the legal domain, a general model like GPT-3, trained on diverse texts, can draft a basic legal document but may lack nuanced language and specialized knowledge. In contrast, a model fine-tuned on a comprehensive collection of legal texts from a particular area, such as U.S. intellectual property law, is likely to produce more relevant and contextually appropriate documents, including up-to-date terminology and jurisdiction-specific clauses. This illustrates how a fine-tuned LLM can be a more precise, valuable and efficient tool for legal professionals.

Interestingly, smaller fine-tuned models can outperform larger models that have not been fine-tuned, at least on the tasks they were tuned for.

How to fine-tune LLMs

The pre-trained model, which already has a broad understanding of language from its initial training, is exposed to new data that reflects the specific context or subject matter you want it to specialize in. This process typically involves setting up a training regime where the model is fed examples from a new dataset, and its parameters are adjusted to reduce the error in its predictions for this specific type of data. Training techniques include reinforcement learning, imitation learning, self-training and meta-learning.
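The core loop described above (start from existing parameters, feed in task examples, nudge the parameters to reduce prediction error) can be shown at toy scale. In this sketch, which is an illustration rather than a real LLM workflow, a two-feature logistic model stands in for the LLM, its "pre-trained" weights are invented, and plain stochastic gradient descent does the adjusting.

```python
import math

def predict(weights, bias, x):
    """Logistic model: probability that example x belongs to the positive class."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, data):
    """Average cross-entropy error over (features, label) pairs."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def fine_tune(weights, bias, data, lr=0.1, epochs=50):
    """Start from pre-trained parameters and adjust them by SGD on task-specific data."""
    weights = list(weights)  # copy, so the pre-trained weights stay untouched
    for _ in range(epochs):
        for x, y in data:
            err = predict(weights, bias, x) - y  # gradient of the log loss w.r.t. z
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

# Hypothetical stand-ins: "pre-trained" weights and a small task-specific dataset
pretrained_w, pretrained_b = [0.5, -0.5], 0.0
task_data = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.0, 1.0], 1), ([0.1, 0.8], 1)]

before = log_loss(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
after = log_loss(tuned_w, tuned_b, task_data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

Real fine-tuning runs the same idea over billions of parameters with a deep learning framework, but the principle of lowering the error on the new data is identical.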

How much data is needed for LLM fine-tuning?

The amount of data required for fine-tuning can vary widely depending on the complexity of the task and the specificity of the domain. For simpler tasks or closely related domains, a few hundred examples might be sufficient. However, for more complex or specialized tasks, thousands or even hundreds of thousands of examples might be needed. The key is that the data should be representative of the kind of tasks the model will perform after fine-tuning.
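Whatever the volume, the examples usually have to be packaged in a consistent format, and it helps to check early whether every category is represented. The sketch below assumes a hypothetical sentiment task and writes each example as a JSON Lines record with system, user and assistant messages, a layout similar to the chat format used by OpenAI's fine-tuning API; check the provider's current documentation before relying on it.

```python
import json
from collections import Counter

# Hypothetical instruction for the sentiment example
SYSTEM_PROMPT = "Classify the sentiment of the customer feedback as positive, negative or neutral."

def to_chat_record(text, label):
    """Wrap one labeled example as a chat-style record: system, user and assistant turns."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": text},
        {"role": "assistant", "content": label},
    ]}

def to_jsonl(examples):
    """Serialize (text, label) pairs as JSON Lines and count the examples per label."""
    lines = [json.dumps(to_chat_record(text, label)) for text, label in examples]
    counts = Counter(label for _, label in examples)
    return "\n".join(lines) + "\n", counts

examples = [
    ("The checkout was fast and easy.", "positive"),
    ("My order arrived broken.", "negative"),
    ("Delivery took the expected three days.", "neutral"),
]
jsonl, label_counts = to_jsonl(examples)
print(label_counts)
```

A per-label count like this is a cheap first check on representativeness: a class with only a handful of examples is easy to spot before any training budget is spent.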

Can fine-tuning ruin the model’s initial training?

In a nutshell, yes. In a phenomenon known as “catastrophic forgetting,” the model can lose some of what it learned during initial training. This can be mitigated with techniques like regularization, a sufficiently diverse fine-tuning dataset, and recursive self-distillation.
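One concrete form of regularization is to penalize how far the weights drift from their pre-trained values, in the spirit of the L2-SP penalty. The sketch below assumes a toy linear model trained on half mean squared error; `lam` is a hypothetical strength parameter, where `lam = 0` is plain fine-tuning and larger values anchor the model more firmly to what it already knows.

```python
def fine_tune_l2sp(w_pre, data, lam=0.0, lr=0.05, epochs=200):
    """Gradient descent on half mean squared error plus (lam / 2) * ||w - w_pre||^2."""
    w = list(w_pre)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += err * xi / n
        # The penalty adds lam * (w - w_pre), pulling weights back toward pre-training
        w = [wi - lr * (g + lam * (wi - wp)) for wi, g, wp in zip(w, grad, w_pre)]
    return w

def distance(a, b):
    """Euclidean distance between two weight vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5

w_pre = [1.0, 1.0]  # hypothetical pre-trained weights
task = [([1.0, 0.0], 3.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 2.0)]

plain = fine_tune_l2sp(w_pre, task, lam=0.0)
anchored = fine_tune_l2sp(w_pre, task, lam=1.0)
print(f"drift without penalty: {distance(plain, w_pre):.3f}")
print(f"drift with penalty:    {distance(anchored, w_pre):.3f}")
```

The trade-off is visible in the two results: the unpenalized run fits the new task more closely but moves much further from its starting point, which is exactly the movement that risks forgetting.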

LLMs are versatile tools with applications across many industries, each with its own language and data characteristics. In healthcare, for example, LLMs can be fine-tuned to understand medical terminology and patient histories, assisting with diagnostics and treatment suggestions. A recent article from Stanford highlighted the promise and pitfalls of such technologies, particularly the need for fine-tuned LLMs. The authors discuss “how the medical field can best harness LLMs – from evaluating the accuracy of algorithms to ensuring they fit with a provider’s workflow. Simply put, the medical field needs to start shaping the creation and training of its own LLMs and not rely on those that come pre-packaged from tech companies.”

In the financial sector, firms like JPMorgan Chase have leveraged fine-tuned models for financial analysis, extracting insights from financial reports and market news to support more informed investment decisions and a better understanding of market trends. Without fine-tuning, a model relies to some degree on the general “wisdom of the crowd” found across the internet; with fine-tuning, it can draw on specialized finance sources and give more in-depth, accurate and meaningful information. This also shows that there is a place for both fine-tuned and general models: the average person may neither want nor understand the complex finance concepts a fine-tuned model can provide. There are even open-source options such as FinGPT from AI4Finance.

Similarly, in customer service, fine-tuning enables LLMs to understand and respond to customer queries more effectively. By training on queries about specific products or services, these models can provide more accurate and helpful responses, improving the customer experience. A model that has not been specifically trained risks giving plainly wrong answers, or even recommending competitors’ products.

These examples show the importance of fine-tuning LLMs, tailoring their capabilities to specific industry needs and enhancing their functionality in real-world applications.

Fine-tuning an LLM involves several technical considerations. The first step is selecting a pre-trained model that aligns with the target task (how to choose one, and how models differ when it comes to fine-tuning, is beyond the scope of this piece). For instance, models like BERT and GPT-3, known for their robust general language understanding, are popular choices for fine-tuning.

Fine-tuning typically involves choosing the model’s learning rate, a hyperparameter that determines how much the model’s parameters change in response to the error it sees. A small learning rate is usually preferred so that updates are incremental, which reduces the risk of overfitting to the fine-tuning data and of overwriting what the model learned during pre-training.
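A common way to keep fine-tuning updates small and stable is a schedule that ramps the learning rate up linearly over a short warmup and then decays it linearly to zero, similar in spirit to the linear-with-warmup schedulers found in popular training libraries. In this minimal sketch, the peak value of `2e-5` and the 100 warmup steps are illustrative assumptions, not recommendations for any particular model.

```python
def learning_rate(step: int, total_steps: int, peak_lr: float = 2e-5, warmup_steps: int = 100) -> float:
    """Linear warmup to peak_lr, then linear decay to zero at total_steps."""
    if step < warmup_steps:
        # Ramp up gradually so early updates do not disturb pre-trained weights too much
        return peak_lr * (step + 1) / warmup_steps
    # Decay linearly from peak_lr at the end of warmup to 0 at total_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / max(total_steps - warmup_steps, 1)

for step in (0, 50, 100, 500, 1000):
    print(step, f"{learning_rate(step, total_steps=1000):.2e}")
```

In a real training loop, the value returned for each step would be handed to the optimizer before the parameter update.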

Regular evaluation is crucial during fine-tuning. This involves testing the model on a separate validation dataset to monitor its performance and make necessary adjustments.
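One typical adjustment driven by the validation set is early stopping: keep the checkpoint with the best validation loss and stop once it has not improved for a few evaluations. The sketch below assumes the per-epoch validation losses have already been computed (the numbers are invented for illustration), and `patience` is a hypothetical parameter name.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0  # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs in a row
    return best_epoch

history = [0.90, 0.72, 0.65, 0.66, 0.70, 0.60]  # hypothetical validation losses
print(early_stopping_epoch(history, patience=2))
print(early_stopping_epoch(history, patience=3))
```

With `patience=2` training stops at epoch 4 and keeps epoch 2; with `patience=3` it waits long enough to see the later improvement at epoch 5. That is the trade-off the setting controls: stopping early saves compute and limits overfitting, while waiting longer can catch late gains.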

Fine-tuning Large Language Models (LLMs) involves several challenges that can impact the effectiveness and applicability of the models. Some of the primary challenges include:

Let’s say you’re fine-tuning an LLM through the OpenAI API to analyze the sentiment of customer feedback. The cost structure would look something like this. For training, assume around 500,000 tokens for training and validation and a price of $0.02 to $0.06 per 1,000 tokens; at an average of $0.04 per 1,000 tokens, training would cost approximately $20. After training, if the model is used to predict the sentiment of about 100,000 pieces of feedback each month, at an average of 50 tokens each, that comes to 5,000,000 tokens per month. Assuming, for simplicity, that the same rate applies, this would mean a recurring monthly cost of around $200. These figures exclude other potential expenses such as data storage and transfer, and they will vary with data complexity, usage volume, current pricing and other overheads.
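The arithmetic above is easy to rerun with your own volumes and prices. This sketch uses only the figures from the example (500,000 training tokens, 100,000 feedback items of 50 tokens each per month, and $0.02 to $0.06 per 1,000 tokens) and, like the example, assumes one rate covers both training and usage.

```python
def token_cost(tokens, usd_per_1k):
    """Cost in US dollars for a number of tokens at a price per 1,000 tokens."""
    return tokens / 1000 * usd_per_1k

TRAINING_TOKENS = 500_000       # training + validation tokens
MONTHLY_TOKENS = 100_000 * 50   # feedback items per month x average tokens per item

for rate in (0.02, 0.04, 0.06):  # low, average and high price per 1,000 tokens
    training = token_cost(TRAINING_TOKENS, rate)
    monthly = token_cost(MONTHLY_TOKENS, rate)
    print(f"${rate:.2f}/1K tokens: training ${training:,.2f}, monthly ${monthly:,.2f}")
```

At the average rate this reproduces the $20 training estimate and the $200 monthly estimate; the low and high rates bracket them at $10 to $30 and $100 to $300.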

These challenges highlight the need for careful planning, resource allocation, ongoing management and oversight, and implementing best practices in the fine-tuning process of LLMs.

Effective LLM fine-tuning requires adherence to several best practices. Perhaps the most important is ensuring the quality of the training data.

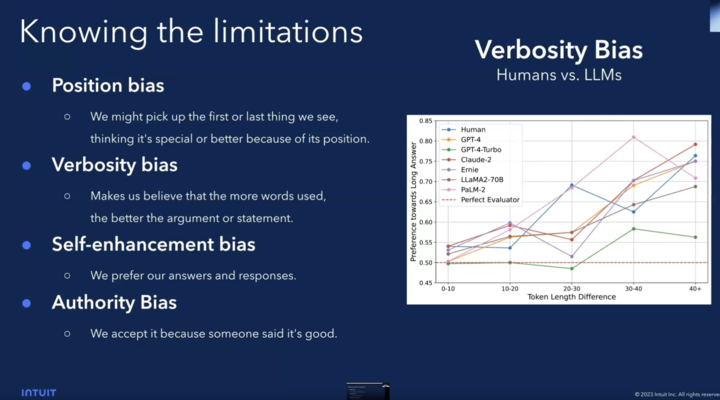

The effectiveness of fine-tuning largely depends on the quality and relevance of the training data. And while various methods can be used to evaluate results, human experts are widely regarded as essential for curating and annotating datasets so that they are well suited to the specific tasks and domains the LLM is being fine-tuned for.
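When several experts annotate the same data, one common way to check how consistent their labels are is Cohen's kappa, which measures agreement between two annotators after correcting for the agreement expected by chance. This is a sketch of the standard formula, not a procedure the article prescribes; the sentiment labels are hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same label by chance
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))
```

A kappa of 1 means perfect agreement and 0 means no better than chance; a low score is a signal to revisit the labeling guidelines before the data is used for fine-tuning.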

What’s more, human guidance can ensure:

Overall, human guidance is a key requirement for continuously improving LLM fine-tuning because of the critical contextual understanding and interpretation it adds.

The field of LLM fine-tuning continues to evolve, with approaches such as few-shot learning and transfer learning gaining prominence. These approaches make it possible to adapt LLMs efficiently with limited data, broadening the range of LLM applications.
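With LLMs, few-shot learning often means placing a handful of solved examples directly in the prompt instead of updating the model's weights. A minimal sketch of assembling such a prompt follows; the function name, the `Input:`/`Label:` layout and the examples are all hypothetical choices.

```python
def few_shot_prompt(examples, query, instruction="Classify the sentiment as positive or negative."):
    """Build an in-context prompt: instruction, a few solved examples, then the new input."""
    parts = [instruction, ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nLabel: {label}\n")
    parts.append(f"Input: {query}\nLabel:")  # the model is expected to complete the label
    return "\n".join(parts)

shots = [
    ("Great service, will order again.", "positive"),
    ("The package never arrived.", "negative"),
]
print(few_shot_prompt(shots, "Support solved my issue in minutes."))
```

Because no training run is involved, this is a cheap way to test whether a task is feasible before committing to a full fine-tuning effort.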

LLM fine-tuning is crucial for unlocking the full potential of advanced models. By tailoring LLMs to specific tasks and industries, we can harness their capabilities to solve complex problems and innovate in various domains.

A key requirement for continuously improving LLM fine-tuning is incorporating human guidance. This can take your LLM fine-tuning to the next level, ensuring a model that is, in the words of Sam Altman, “capable, useful and safe.”

To get human guidance for your LLM fine-tuning that is infinitely scalable, entirely flexible, extremely accurate and completely seamless, look no further than Tasq.ai.
