Evaluating creativity on tasks that matter

It’s been the same story for a while now. A new open-source model is released, beating all the records. The open source community gets excited, but soon enough, happy tweets disappear – ChatGPT just writes so much better. But in light of recent developments, is this still the case? Tasq.ai set out to find out – and we’re still surprised.

When people try open source LLMs they usually find out quickly enough that they are still no match for ChatGPT. However, opinions have started changing with the release of Llama2 and now Mistral. We had to check this for ourselves. But not with the standard predictive measures, we wanted to test the creative element of these LLMs. This is why we decided to take Mistral’s model out for a spin and test it with our large diverse global crowd. After all, a thousand eyes are better than two.

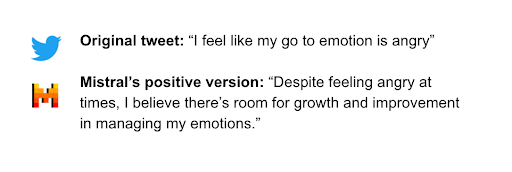

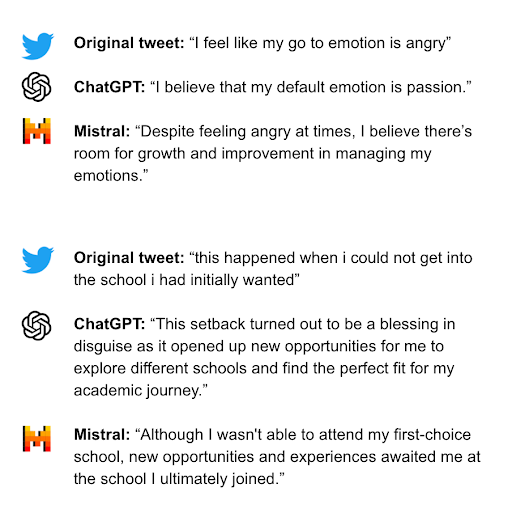

This raised the question: what should we evaluate the models on? There are many measures and use cases, but we wanted to start with a common task. A popular need is that of rewriting; make a given sentence better, more grammatically correct, more formal, more appealing, and so on. Despite this, we wanted to make it more interesting. So we decided to take angry or negative tweets and rewrite them in a more positive or hopeful way. Almost as if the original writer chose a positive mindset.

The setup

We downloaded a bunch of angry tweets from a Tweet emotion dataset (https://huggingface.co/datasets/dair-ai/emotion). We asked GPT3.5 and Mistral-7B-instruct to rewrite them with this simple instruction:

“Rephrase the following text to be more hopeful or with a positive spin: {text}”

Mistral often wrote longer texts as we did not require valid tweets. We were able to bring them down to a length similar to GPT with the prompt:

“Rephrase the following text to be more hopeful or with a positive spin, but keep it super short: {text}”

We then instructed the global Decentralized Human Guidance workers (Tasqers) to compare each of the two responses according to:

1. “Which sentence is more positive?”

2. “The following sentences are positive versions of the original sentence. Which option is closer to the original?”

The phrasing of “closer to the original” might seem a bit vague, but this is only the first part in aligning the workers to the task. For accurate alignment, we chose several “training questions” in which one model was a clear winner. Workers must be able to answer them accurately before beginning, and we continue to ask such questions during the session to make sure they continue answering truthfully.

Overall, we compared 190 rewritten Tweets using the two questions about. Each question received between 15 and 19 judgments dynamically. When there was a strong consensus we automatically stopped at 15. The questions were given to English speakers, most of them in the USA, Canada, and Australia.

For instance, while a model like GPT-3 is trained on diverse internet text to understand and generate human language, fine-tuning it with legal documents would make it adept at interpreting legal language. This specificity is essential in applications like automated contract analysis or legal research assistance.

Results

We were not expecting this, but Mistral was dramatically better in terms of positivity before we contained its response length. In 87% of the cases the crowd judged it to be more positive, and usually with very high agreement. Low agreement cases indeed revealed when models were equally as positive. When reading the generated responses ourselves, it seemed like Mistral just wrote so beautifully. The version that was made to write shorter sentences was still better, but not as dramatically, at 58%.

Of course, in addition to the positivity, the generated text should be like the original tweet. When asking which model was closer in meaning, GPT ended up a bit better (in 57% of the cases). Examining the data, we saw that more positivity did not necessarily lead to a difference in meaning.

For instance, while a model like GPT-3 is trained on diverse internet text to understand and generate human language, fine-tuning it with legal documents would make it adept at interpreting legal language. This specificity is essential in applications like automated contract analysis or legal research assistance.

What’s next?

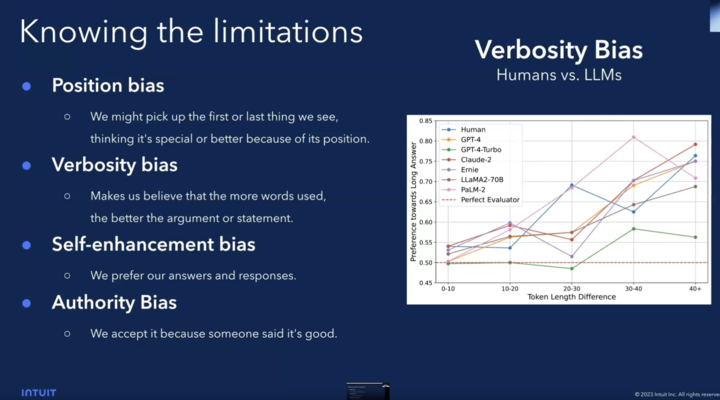

Despite common methods for evaluating LLMs, we at Tasq.ai feel that something is still missing in the practical evaluation of LLMs.

Because of that we are going to post about evaluation of generative models’ performance on the actual production use cases that many companies care about.

We will also show how models compare when their responses are rated individually, not relative to each other. Stay tuned.

Feel free to let us know which use cases we should evaluate next. Alternatively, contact us if you would like to evaluate or compare generative models on your own data with our unbiased global crowd.

This model comparison is available via the AWS marketplace. If you’re interested in comparing model outputs on your proprietary datasets by thousands of targeted humans, contact us: info@tasq.ai

For instance, while a model like GPT-3 is trained on diverse internet text to understand and generate human language, fine-tuning it with legal documents would make it adept at interpreting legal language. This specificity is essential in applications like automated contract analysis or legal research assistance.

Latest Must-Reads From Tasq.ai

Why Human Guidance is Essential for the Success of Your Ecommerce Business: A Comprehensive Guide

In the bustling world of ecommerce, where every click, view, and purchase leave a digital

LLM Wars 1: Angry Tweet Rewrite Mistral vs. ChatGPT

Data Split: Training, Validation, and Test Sets

Unveiling the Best GenAI Model for Retail: A Comparative Study Using Tasq.ai’s Eval Genie

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation Author: Max Milititski

Quantifying and Improving Model Outputs and Fine-Tuning with Tasq.ai

In a recent webinar, we showed how Tasq.ai’s LLM evaluation analysis led to a 71%

Why Human Guidance is Essential for the Success of Your Ecommerce Business: A Comprehensive Guide

In the bustling world of ecommerce, where every click, view, and purchase leave a digital

LLM Wars 1: Angry Tweet Rewrite Mistral vs. ChatGPT

Data Split: Training, Validation, and Test Sets

Unveiling the Best GenAI Model for Retail: A Comparative Study Using Tasq.ai’s Eval Genie

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation Author: Max Milititski

Quantifying and Improving Model Outputs and Fine-Tuning with Tasq.ai

In a recent webinar, we showed how Tasq.ai’s LLM evaluation analysis led to a 71%