Artificial intelligence (AI) and machine learning (ML) are advancing at an astounding pace, far faster than most people would have predicted a decade or so ago.

While this is great news for technology, it also means that data scientists and ML engineers often don’t have enough real data to train and develop their ML models, either because the data doesn’t exist or because confidentiality and privacy restrictions prevent its use.

To overcome this problem, they’re increasingly turning to synthetic data.

What is synthetic data?

Synthetic data is annotated information that computer algorithms generate as an alternative to real-world data. It’s created artificially in digital environments rather than collected from the real world.

The use of synthetic data is rising quickly, with recent estimates predicting that by 2024, 60% of the data used to develop AI, ML, and analytics projects will be synthetically generated.

Why synthetic data is important

Synthetic data is important because it can be readily generated to meet needs or solve problems and challenges that would otherwise stand in the way of progress. Examples of such problems might include:

- Privacy requirements, in areas like healthcare, that limit the availability of data.

- Real-world data that costs more to generate than the ML model would deliver in benefits.

- Data that a project needs but that doesn’t exist or is very difficult to obtain.

Generating synthetic data that reflects real-world data can solve these problems. It’s a powerful, inexpensive solution that can support the development of ML models without compromising quality or privacy.

Synthetic data challenges

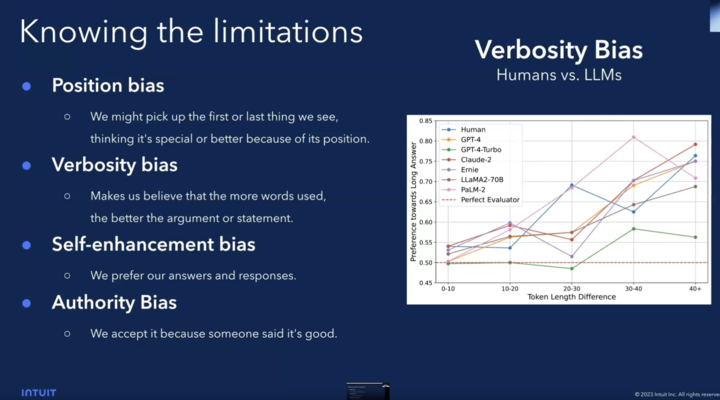

While synthetic data has numerous use cases and applications, there are still some challenges to be aware of. ML models trained on synthetic data may exhibit more bias, and inconsistencies can creep in when the generator tries to replicate the complexities of real data.

There’s also the challenge of trust. While the use of synthetic data is on the rise, some are still hesitant when it comes to adoption because they don’t see it as valid. There’s very much an attitude of “Well, it’s not real data, so it can’t be trusted”.

Improving the acceptance and general trustworthiness of synthetic data is therefore a priority for the companies that produce it. To do this, they must demonstrate that their generative ML models, and the synthetic data those models create, are of consistently high quality. Quality also has to be quantified: how can they measure improvement in a model and, more importantly, how can they detect regression?

That’s why we’ve created a flexible survey tool that addresses these challenges. It enables clients to design and conduct experiments that ask the crowd to rank how realistic modified images appear to them on a scale of 1 to 10.

Based on the results, clients can evaluate and measure the performance of each version of their ML model and identify cases where the model can be improved.

The more images that score between 8 and 10 (i.e., they appear ‘real’ to our global, diverse, unbiased crowd of Tasqers), the better the client’s ML model is at generating synthetic image datasets. Conversely, the more images that receive a low score, the worse the model is at this task, which tells clients that it needs to be improved.
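
As a rough illustration of how such scores could be turned into a single number per model version, here is a minimal Python sketch. It is not our production code: the image IDs, the use of the median to combine several Tasqers’ scores for one image, and the 5% regression tolerance are all assumptions made for the example. Only the 8-to-10 ‘real’ band comes from the description above.

```python
from statistics import median

REAL_THRESHOLD = 8  # scores of 8 to 10 count as "appears real" (from the text)


def realism_rate(ratings_by_image):
    """Return the fraction of images whose median crowd score is >= REAL_THRESHOLD.

    ratings_by_image maps a hypothetical image ID to the list of 1-10 scores
    that image received. Combining scores with the median is an assumption.
    """
    if not ratings_by_image:
        raise ValueError("no rated images")
    real = sum(
        1
        for scores in ratings_by_image.values()
        if median(scores) >= REAL_THRESHOLD
    )
    return real / len(ratings_by_image)


def has_regressed(previous_rate, current_rate, tolerance=0.05):
    """Flag a regression when the realism rate drops by more than `tolerance`.

    The 0.05 default is a hypothetical value chosen for illustration.
    """
    return previous_rate - current_rate > tolerance


# Made-up scores for two versions of the same generative model.
model_v1 = {"img_a": [9, 8, 10], "img_b": [4, 5, 3], "img_c": [8, 9, 7], "img_d": [2, 3, 1]}
model_v2 = {"img_a": [9, 9, 10], "img_b": [8, 9, 8], "img_c": [8, 8, 9], "img_d": [3, 2, 4]}

rate_v1 = realism_rate(model_v1)
rate_v2 = realism_rate(model_v2)
print(f"v1: {rate_v1:.0%} of images rated real, v2: {rate_v2:.0%}")
print("regression detected:", has_regressed(rate_v1, rate_v2))
```

Tracking one rate per model version makes improvement and regression directly comparable between releases, although a real evaluation would also want enough images and raters per version for the difference to be meaningful.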

Synthetic data ranking in practice

The task of ranking a client’s artificially generated images is completed through the Tasq platform, where millions of users are assigned to work on ranking projects.

This presented us with a challenge of our own: we needed to screen out incompetent labelers without influencing how other users rank images, so that the results are not skewed.

Evaluating a data labeler’s accuracy and intent is relatively simple in an image annotation project for a computer vision ML model. For example, in a self-driving car environment, a user who labels a human as a tree is clearly wrong.

When ranking synthetic image data, however, there are no right or wrong answers because everything is far more subjective; one user could perceive an image to be authentic, whereas another could detect a slight defect and reject it as a result.

We solved this problem in a couple of ways:

- By training the Tasqers on clear cases where images are either i) obviously real or ii) obviously corrupt. Labelers who fail to correctly identify these images are disqualified from participating in surveys.

- By accepting a range of answers. Image rankings between 8 and 10 are accepted as good, whereas rankings of 3 or below are processed as corrupted.
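
The two steps above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the Tasq platform’s actual logic: the function names, the `uncertain` label for mid-range scores, and the requirement that a labeler gets every control image right (`min_accuracy=1.0`) are hypothetical choices for the example.

```python
GOOD_MIN = 8     # 8 to 10 is accepted as "good" (from the text)
CORRUPT_MAX = 3  # 3 or below is processed as "corrupted" (from the text)


def bucket(score):
    """Map a raw score to 'good', 'corrupted', or 'uncertain'."""
    if score >= GOOD_MIN:
        return "good"
    if score <= CORRUPT_MAX:
        return "corrupted"
    return "uncertain"  # middle of the scale; this label is an assumption


def passes_screening(answers, controls, min_accuracy=1.0):
    """Check a labeler's scores on control images with a known expected bucket.

    answers:  {image_id: score given by the labeler}
    controls: {image_id: "good" or "corrupted"} for the obviously real or
              obviously corrupt images used in training
    min_accuracy is a hypothetical pass mark; the text does not state one.
    """
    if not controls:
        raise ValueError("no control images")
    correct = sum(
        1
        for image_id, expected in controls.items()
        if image_id in answers and bucket(answers[image_id]) == expected
    )
    return correct / len(controls) >= min_accuracy


# Made-up control set and two made-up labelers.
controls = {"ctrl_real": "good", "ctrl_corrupt": "corrupted"}
careful = {"ctrl_real": 9, "ctrl_corrupt": 2, "img_x": 6}
careless = {"ctrl_real": 2, "ctrl_corrupt": 9, "img_x": 10}

print("careful labeler qualifies:", passes_screening(careful, controls))
print("careless labeler qualifies:", passes_screening(careless, controls))
```

Comparing buckets rather than exact scores is what allows for subjectivity: a labeler who gives an obviously real image an 8 and one who gives it a 10 both pass, while only answers in the opposite band count against them.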

The end result of this ranking process is greater confidence for our clients that their ML models are working as intended and generating synthetic data robust enough for training purposes. Clients can test the quality of their models before deploying them to production, and catch issues and mistakes in a timely manner.