To ensure AI’s future is responsible, we must ask uncomfortable ethical questions. In this article, we introduce the ethics of AI and explore how AI ethics must align with human ethical principles if AI is to be adopted by society at large.

Today, Artificial Intelligence (AI) plays an important role in the lives of billions of people around the world. The use of these complex systems has raised fundamental concerns: what we should do with them, what they should do, what risks they entail, and how we can control them.

Rapid advances in AI and Machine Learning bring many benefits. However, to avoid the risks and negative consequences of deploying AI in society, we must investigate the ethical, social, and legal aspects of its ecosystem.

The concentration of technological, economic, and political power in the hands of the top five players (Google, Meta, Microsoft, Apple, and Amazon) gives them undue influence over the areas of society that shape public opinion in democracies, including government, civil society, journalism and, most importantly, science and research. These companies monopolize the best and brightest human capital as well as other critical resources. As a result, many other companies, including small and midsize enterprises, are handicapped in their attempts to compete.

Advances over the last decade have allowed these companies to monitor users’ behavior, recommend news, information, and products to them and, above all, target them with ads. In 2020, Google’s ads generated more than $140 billion in revenue, and Facebook’s generated $84 billion.

Some efforts toward ethical AI have failed precisely because of a lack of accountability. In 2022, Google fired an engineer who claimed that an unreleased AI system had become sentient; the company said he had violated its employment and data security policies.

Andrew Ng said, “We have seen AI providing conversation and comfort to the lonely. We have also seen AI engaging in racial discrimination. Yet the biggest harm that AI is likely to do to individuals in the short term is job displacement, as the amount of work we can automate with AI is vastly larger than before. As leaders, it is incumbent on all of us to make sure we are building a world in which every individual has an opportunity to thrive.”

Ethics is a set of moral principles that help us discern right from wrong.

At the heart of AI ethics is the question of how human designers, producers, and users should act to minimize the ethical harms that AI may cause in society, whether those harms result from subpar (unethical) design, unsuitable application, or misuse.

There are three subfields of ethics: Meta-ethics, Normative Ethics, and Applied Ethics. AI ethics is a subfield of Applied Ethics. Applied ethics, also called Practical Ethics, is the application of ethics to real-world problems.

AI Ethics asks what actions should be taken by creators, producers, regulators, and operators to reduce the ethical risks that AI poses to society.

Ethics of AI: Major Themes

1. Bias

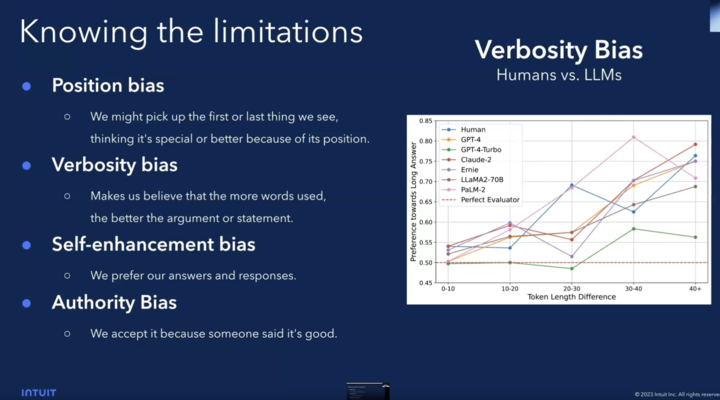

Because humans create AI, AI is susceptible to human bias. Algorithmic bias is a systematic lack of fairness in the outcomes a computer system produces.

AI models have demonstrated their ability to replicate and institutionalize bias. In 2015, Amazon discovered that the algorithm it used to shortlist resumes was biased against women. The model had been trained on resumes submitted over the previous decade, most of them from men, so it learned to favor men over women. COMPAS, an algorithmic tool used by courts in several US states to predict recidivism, was found to be biased against Black defendants.

Human bias has been researched extensively in psychology for many years. Much of it stems from implicit associations: biases we are unaware of that can nevertheless influence the outcome of an event.

In recent years, human prejudices have been found to contaminate AI systems through the data they learn from and the algorithms built on that data. To avoid bias in AI systems, we must understand its underlying causes.
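A common first step in understanding bias in a system such as the resume screener described above is to compare how often it selects people from each group. The sketch below is a minimal, hypothetical audit, assuming binary decisions and a known group label for each candidate; the data, the function names, and the 0.8 cut-off (the “four-fifths” rule of thumb from US employment guidance) are illustrative assumptions, not details of any system mentioned in this article.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. 'shortlisted') for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so selection rates do not differ
    return min(rates.values()) / highest

# Hypothetical screening outcomes: 1 = shortlisted, 0 = rejected.
decisions = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
groups = ["men"] * 5 + ["women"] * 5

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("warning: fails the four-fifths rule of thumb")
```

A low ratio does not prove discrimination on its own, and a ratio near 1.0 does not rule it out; it is a signal that the underlying causes need investigating.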

2. Transparency in AI

One reason why people might fear AI is that AI technologies can be hard to explain.

A transparent AI system solves the “black box dilemma”: the problem of a technology that cannot explain how it reached its conclusions.

Organizations don’t always have the expertise to create and maintain fair and accurate AI-based systems. For most companies, the supply chain for AI is very long and complicated.

Transparency can be difficult to achieve with modern AI systems, particularly those based on Deep Learning. Deep Learning systems are built on artificial neural networks (ANNs): collections of interconnected nodes inspired by a simplified model of how neurons connect in the brain. Once an ANN has been trained on data, examining its internal structure to understand why and how it made a particular decision is extremely difficult. For this reason, such systems are known as “black boxes.”

To make models more transparent, the following can be done:

Use simpler models. This, however, frequently sacrifices accuracy in favor of explainability.

Combine simpler and more complex models. The sophisticated model lets the system perform more complex computations, while the simpler model provides transparency.

Modify inputs to track relevant input-output dependencies. If manipulating an input changes the model’s overall result, that input evidently influences the classification.
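The input-manipulation technique can be sketched without any access to a model’s internals. The example below assumes a hypothetical scoring function `black_box` that we are only allowed to query; it nudges one input at a time and records how far the output moves. The feature names, weights, and step size are invented for illustration.

```python
import math

def black_box(x):
    """Stand-in for an opaque model that we can query but not inspect."""
    income, debt, age = x
    score = 0.04 * income - 0.09 * debt + 0.0 * age - 1.0
    return 1.0 / (1.0 + math.exp(-score))  # probability-like output in (0, 1)

def perturbation_sensitivity(model, x, delta=1.0):
    """Change in the model's output when each input is nudged by `delta` in turn."""
    base = model(x)
    effects = []
    for i in range(len(x)):
        nudged = list(x)
        nudged[i] += delta
        effects.append(model(nudged) - base)
    return effects

applicant = [50.0, 20.0, 35.0]  # hypothetical income (k), debt (k), age
names = ["income", "debt", "age"]
effects = perturbation_sensitivity(black_box, applicant)
for name, effect in zip(names, effects):
    print(f"{name:>6}: {effect:+.4f}")
```

Here a reviewer can see that raising income raises the score, raising debt lowers it, and age has no effect at all, without ever opening the model. More elaborate explanation methods build on the same idea of querying a model with modified inputs.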

3. Privacy

Privacy concerns stem from the public and political demand that people’s personal information be respected. Data privacy, data protection, and data security frequently come up in discussions of privacy.

The biggest concern for now: Personal Data

Corporations collect personal data for profit and profile people based on their behavior, both online and offline. They often know more about us than we or our friends do, and they use this information, and make it available to others, for profit, surveillance, security, and election campaigns.

One of the major practical difficulties is actually enforcing privacy regulation, both at the level of the state and at the level of the individual who has a claim. Hence, aside from government regulation, each company developing AI should prioritize privacy and generalize its data so that individual records are not stored. One example of a legal framework that ensures data protection and privacy is the GDPR.

GDPR

The General Data Protection Regulation (GDPR) is a legal framework that sets guidelines for the collection and processing of personal data from individuals in the European Union. It was adopted in 2016 and has applied since May 2018, giving people more control over their data while protecting their personal information.
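Generalizing data so that individual records are not stored can be illustrated with k-anonymity, a well-known technique that this article does not itself name: quasi-identifiers such as age and ZIP code are coarsened until every combination is shared by at least k records. The sketch below uses hypothetical records and hypothetical bucket sizes, and passing this check alone does not guarantee GDPR compliance.

```python
from collections import Counter

def generalize(record):
    """Coarsen quasi-identifiers: exact age -> 10-year band, ZIP -> 3-digit prefix.

    The name field is dropped entirely; only the attribute needed for analysis is kept.
    """
    low = (record["age"] // 10) * 10
    return {
        "age_band": f"{low}-{low + 9}",
        "zip_prefix": record["zip"][:3] + "**",
        "diagnosis": record["diagnosis"],
    }

def is_k_anonymous(records, quasi_ids, k):
    """True if every combination of quasi-identifier values appears at least k times."""
    counts = Counter(tuple(r[q] for q in quasi_ids) for r in records)
    return all(c >= k for c in counts.values())

# Hypothetical raw records.
raw = [
    {"name": "A", "age": 34, "zip": "10115", "diagnosis": "flu"},
    {"name": "B", "age": 37, "zip": "10117", "diagnosis": "asthma"},
    {"name": "C", "age": 52, "zip": "20095", "diagnosis": "flu"},
    {"name": "D", "age": 58, "zip": "20097", "diagnosis": "diabetes"},
]
stored = [generalize(r) for r in raw]
print(is_k_anonymous(raw, ["age", "zip"], k=2))                 # False
print(is_k_anonymous(stored, ["age_band", "zip_prefix"], k=2))  # True
```

k-anonymity has known weaknesses (for example, when every record in a group shares the same sensitive value), so in practice it is combined with other safeguards.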

4. Safety

The first question to ask about any technology is whether it works as it should. Will AI systems deliver on their promises, or will they fail? What will be the consequences of their failures if and when they occur?

Take the example of self-driving cars. Many of the car’s decisions (whether to turn, stop, slow down, or speed up) are governed by neural networks, which are black-box models that are hard to interpret.

The British political consulting firm Cambridge Analytica was revealed to have harvested the data of millions of Facebook users without their consent in order to influence US elections, raising concerns about how algorithms can be abused to manipulate the public sphere on a large scale.

Several companies today, such as Meta and TikTok, focus heavily on capturing audience attention on their platforms because ads account for a large share of their revenue. To maximize people’s attention, they use AI to gather information about users and recommend content to them.

Research from MIT revealed that tech giants are paying millions of dollars to the operators of clickbait pages, putting the world’s information ecosystems at risk.

To ensure that your AI system operates safely, you must prioritize the technical objectives of accuracy, reliability, security, and robustness.
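Robustness can be checked empirically: add small random noise to inputs and count how often the prediction changes. The sketch below assumes a hypothetical two-feature linear classifier and an arbitrary noise level of 0.05; both are placeholders for a real model and a noise scale appropriate to its data.

```python
import random

def classify(x):
    """Hypothetical classifier: positive when the weighted sum is above zero."""
    return int(0.6 * x[0] - 0.4 * x[1] > 0)

def flip_rate(model, inputs, noise=0.05, trials=200, seed=0):
    """Fraction of noisy copies whose predicted label differs from the clean prediction."""
    rng = random.Random(seed)  # fixed seed so the check is reproducible
    flips = total = 0
    for x in inputs:
        clean = model(x)
        for _ in range(trials):
            noisy = [v + rng.gauss(0.0, noise) for v in x]
            flips += model(noisy) != clean
            total += 1
    return flips / total

far_from_boundary = [[2.0, 0.5], [-1.0, 1.5]]
near_boundary = [[0.41, 0.6], [0.39, 0.6]]
far = flip_rate(classify, far_from_boundary)
near = flip_rate(classify, near_boundary)
print(f"far:  {far:.3f}")
print(f"near: {near:.3f}")
```

Inputs far from the decision boundary keep their label under noise, while inputs close to it flip often. A high flip rate on inputs that should be treated identically is a warning sign before deployment.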

In order to ensure responsible AI development, companies must take two important steps:

Focus on Interpretability

Complex AI algorithms allow organizations to unlock insights from data that were previously unattainable. The black box nature of these systems, however, makes it difficult for business users to comprehend the reasoning behind their decisions.

People must be given the ability to “look under the hood” at AI systems’ underlying models, investigate the data used to train them, reveal the reasoning behind each decision, and promptly provide coherent explanations to all stakeholders in order to foster trust in these systems.

Explainability techniques quantify how much each attribute contributes to the output of a Machine Learning model. These summaries help enterprises understand why the model made the decisions it did.
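For a linear model, each attribute’s contribution can be computed exactly as its weight times how far the input lies from a baseline, such as the average input; the contributions then sum to the difference between the model’s score for that input and its score for the baseline. The sketch below is a minimal illustration with hypothetical features, weights, and baseline. For linear models with independent features this is the same decomposition that SHAP-style tools report, while complex models require approximation methods.

```python
def predict(weights, bias, x):
    """Score of a linear model: bias + sum of weight * feature."""
    return bias + sum(w * xi for w, xi in zip(weights, x))

def linear_contributions(weights, x, baseline):
    """Per-feature contribution w_i * (x_i - baseline_i).

    These sum exactly to predict(x) - predict(baseline), so they fully
    account for how this input's score differs from the baseline score.
    """
    return [w * (xi - bi) for w, xi, bi in zip(weights, x, baseline)]

names = ["income", "existing_loans", "missed_payments"]  # hypothetical features
weights = [0.5, 0.3, -0.2]
bias = 1.0
baseline = [5.0, 2.0, 1.0]   # e.g. the average applicant in the training data
applicant = [8.0, 1.0, 3.0]

contribs = linear_contributions(weights, applicant, baseline)
for name, c in zip(names, contribs):
    print(f"{name:>16}: {c:+.2f}")
gap = predict(weights, bias, applicant) - predict(weights, bias, baseline)
print(f"sum of contributions {sum(contribs):+.2f}, score difference {gap:+.2f}")
```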

Develop an Industry-specific Ethical Risk Framework for Data and AI

At a minimum, a good framework should include a statement of the company’s ethical standards (including its ethical nightmares), its governance structure, and an explanation of how that structure will be maintained as personnel and conditions change. Setting up KPIs and a quality assurance program is crucial for monitoring whether your strategy’s tactics are still working.

A strong framework also demonstrates how ethical risk reduction is built into operations. Every company should check whether it has procedures in place for screening out biased algorithms, privacy violations, and inexplicable results.
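Screening procedures like these can be partly automated as a release gate that blocks deployment whenever an ethical-risk KPI misses its threshold. The sketch below is hypothetical: the metric names, values, and thresholds are placeholders that a company would replace with those defined in its own framework.

```python
from dataclasses import dataclass

@dataclass
class Check:
    name: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def passed(self) -> bool:
        """True if the measured value is on the acceptable side of the threshold."""
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold

def release_gate(checks):
    """Return (ok, failures); ok is False if any ethical-risk KPI misses its threshold."""
    failures = [c.name for c in checks if not c.passed()]
    return (not failures, failures)

checks = [
    Check("group_selection_rate_ratio", value=0.72, threshold=0.80),
    Check("prediction_flip_rate", value=0.01, threshold=0.05, higher_is_better=False),
    Check("records_with_direct_identifiers", value=0.0, threshold=0.0, higher_is_better=False),
]
ok, failures = release_gate(checks)
print("release approved" if ok else f"blocked by: {', '.join(failures)}")
```

A gate like this only enforces what the framework already defines; choosing the metrics and thresholds remains a governance decision.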

What does the future hold for ethical AI?

As AI develops, it can help create a more inclusive, sustainable world that benefits global society and the economy. AI ethics will evolve based on the future we want.

Some of the biggest organizations working on ethical AI are AlgorithmWatch, the Defense Advanced Research Projects Agency (DARPA), and the National Security Commission on Artificial Intelligence (NSCAI). These organizations conduct interdisciplinary research and public engagement to help ensure that AI systems are accountable to the communities and contexts in which they are applied.

The crucial point to remember is that the future can and must be fought for. Nothing is predestined; everything is determined by the choices we make along the way.

Ethical concerns about AI systems apply to all stages of the AI system lifecycle (research, design, development, deployment, and use), including maintenance, monitoring, and evaluation. Furthermore, AI actors include natural and legal persons involved in at least one stage of the AI lifecycle (e.g., researchers, programmers, data scientists, users, and companies). This is an ambitious task that cannot be ignored.
