Behind the scenes of Generative AI, where algorithms conjure art, realism, and imagination from lines of code, a quest for truth is unfolding.

Researchers who once had a high degree of certainty in traditional machine learning now find themselves grappling with ambiguity and uncertainty over the quality and performance of their generative models.

Releasing something into the real world that cannot be vouched for, cannot be controlled, and can do tremendous damage is the nightmare of any researcher.

The frustration experienced by GenAI researchers stems largely from the absence of clear evaluation measures in a field where the concept of “ground truth” is often elusive.

Current solutions to gain visibility into generated content include proxy measures and human committees. But they offer only fleeting glimpses, fragments of the whole.

Join us as we uncover the truth behind generated output: whether it exists, and how much of it. We’ll explore various methods and approaches for evaluating different data types, delving into the proxy measures used for images specifically. We’ll also examine Tasq.ai’s novel approach, which provides a heightened level of accuracy and assurance when it comes to evaluating these images.

In standard ML, models are rigorously assessed based on well-defined metrics like accuracy or F1-score. In GenAI, however, the primary goal is to generate output that is true to the intention of the prompt and of sufficiently high quality, and traditional evaluation methods fall short. Instead, researchers resort to proxy measures or internal committees to review results, and neither gives the same certainty about a model.

One of the foremost challenges in evaluating generated images is the absence of a clear and unambiguous ground truth. In traditional ML tasks, such as classification or regression, labels for the training data are readily available. However, in GenAI, where images are generated without explicit labels, determining what constitutes a “correct” output becomes a complex task.

This lack of ground truth makes it challenging to apply conventional evaluation methods directly, and highlights the need for alternative approaches.

While the most accurate way to evaluate GenAI results is via human feedback, this is costly and slow, and a separate evaluation workflow has to be built for each new use case.

Another significant challenge lies in the inherent subjectivity of evaluating image quality. While humans can readily assess whether an image looks realistic or not, quantifying this judgment objectively is far from straightforward.

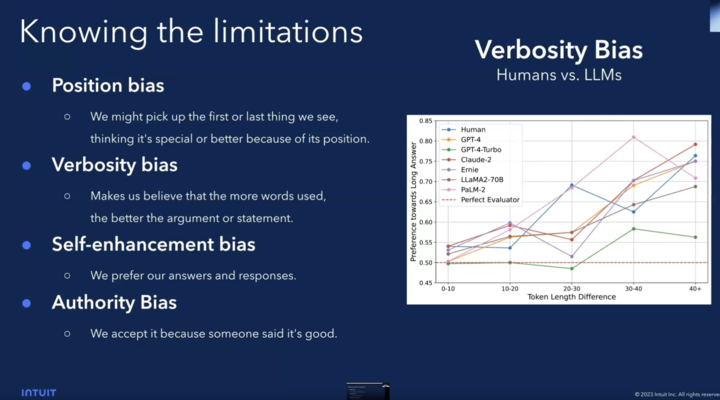

What’s more, different individuals may have varying opinions on what constitutes a high-quality image, or what an image “should” look like, introducing subjectivity and potential biases into the evaluation process.

For example, let’s say you have an editing task. You’ve been given an image, and you want the person in it to smile more. You’ll want to know the following critical information about your output:

Evaluating the diversity and novelty of generated images presents yet another hurdle in GenAI. Models that produce a limited set of repetitive outputs, known as “mode collapse,” can sometimes achieve high scores on traditional evaluation metrics, even though they fail to capture the essence of creative image generation. Ensuring that a model generates a wide range of novel and unique images is essential for its real-world applicability, but measuring these aspects remains a thorny problem.

To address the challenges of evaluating image generation, researchers have turned to proxy measures. Perceptual metrics, such as the Inception Score and Fréchet Inception Distance (FID), have gained popularity. These metrics attempt to quantify image quality using the outputs of a pre-trained image classifier. FID, for instance, measures how closely the statistics of generated images match those of real images. While they provide valuable insights, perceptual metrics have their limitations. For example, generated images might still fail to capture the user’s intent or quality requirements, even while achieving good scores. Put differently, a good score might still be assigned to an undesirable result, which to some degree defeats the whole purpose.

A high-level look at these proxy measures can help demonstrate the pitfalls they present:

Inception Score: this is computed using a pre-trained image classifier called Inception. A set of images is generated using a generative model, and these images are fed through the Inception network to get a probability distribution over classes for each image. The Inception Score combines two ideas. The first is Image Quality: each image should be confidently classified into a meaningful category, so its own class distribution has low entropy. The second is Image Diversity: across the whole set, images should spread over many categories, so the class distribution averaged over all images has high entropy.
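To make these two ingredients concrete, here is a minimal pure-Python sketch of the standard formula, IS = exp(E_x[KL(p(y|x) ‖ p(y))]), where p(y) is the class distribution averaged over all generated images. It assumes the per-image class probabilities have already been produced by a pre-trained classifier, which is not included here. The function name `inception_score` and the small `eps` guard are our own choices. Reference implementations typically use Inception v3’s 1,000 ImageNet classes and average the score over several splits of the sample.

```python
import math


def inception_score(probs, eps=1e-12):
    """Inception Score from per-image class probabilities p(y|x).

    probs: one row per generated image; each row is a probability
    distribution over classes (in practice, the softmax output of a
    pre-trained Inception network, which this sketch does not include).
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y), averaged over all generated images.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    # Mean KL divergence KL(p(y|x) || p(y)). It is large when each image is
    # confidently classified (quality) AND the marginal is spread out (diversity).
    mean_kl = 0.0
    for row in probs:
        mean_kl += sum(
            p * math.log((p + eps) / (marginal[j] + eps))
            for j, p in enumerate(row)
            if p > 0
        )
    mean_kl /= n
    return math.exp(mean_kl)


# Four images, each confidently assigned to a different class.
diverse = [[1.0, 0, 0, 0], [0, 1.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]]
print(inception_score(diverse))      # close to 4, the maximum for 4 classes
print(inception_score(diverse * 2))  # duplicating every image: same score
```

The maximum possible score equals the number of classes. Repeating every image leaves the score unchanged because the metric only sees class probabilities. This is why near-identical outputs within a class can go unnoticed.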

Fréchet Inception Distance (FID): also using the Inception network, FID uses the statistical properties of feature representations – calculating the distance between the means and covariances of feature vectors extracted from real and generated images.
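For reference, the standard definition fits a Gaussian to each set of Inception feature vectors. The real images give a mean and covariance (μ_r, Σ_r), and the generated images give (μ_g, Σ_g). FID is the Fréchet distance between the two Gaussians:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

Lower values are better. The distance is zero only when both the means and the covariances coincide. A low FID therefore says the feature statistics match, not that any individual image matches its prompt.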

These methods can miss errors like mode collapse (e.g. a limited set of near-identical images, one per class, can still achieve a high Inception Score) or other cases of limited diversity. The Inception Score is known to show a bias towards easily classifiable objects in some cases, and FID can be sensitive to small variations because it operates in a high-dimensional feature space.

Moreover, as touched on previously, neither method is designed to assess whether a generated image is in line with the user’s intent and quality requirements. Both also lack a deep contextual understanding of images.

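To finish with an example, let’s say you have a model trained to output images of animals. Due to mode collapse, it starts creating only images of cats. The Inception network will confidently identify each image as a cat, so the quality component of the score looks excellent. In this extreme case the diversity component would pull the Inception Score down. A subtler collapse, however, such as a handful of near-identical images per class, can still score highly. Either way, from a quality, diversity, and user intent perspective, this model is unsuccessful.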

In reality, researchers often use a combination of tools and metrics in addition to perceptual metrics, including human evaluation, task-specific metrics, domain-specific criteria and real-world testing and feedback.

Yet another category of proxy measures focuses on matching the distributions of generated and real data. Metrics like Wasserstein Distance and Maximum Mean Discrepancy (MMD) offer ways to quantify the similarity between these distributions. While distribution matching metrics can be useful for evaluating image generation models, they also come with trade-offs and may not provide a comprehensive assessment of image quality in all scenarios.
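As an illustration of distribution matching, the sketch below computes two quantities in plain Python. The first is a biased estimate of squared MMD with a Gaussian (RBF) kernel. The second is the Wasserstein-1 distance between two equal-size one-dimensional samples. The sketch assumes each image has already been reduced to a feature vector, for example by a pre-trained network. The kernel bandwidth `sigma` and all function names are illustrative choices, not a standard API.

```python
import math


def rbf_kernel(a, b, sigma=1.0):
    # Gaussian (RBF) kernel between two feature vectors.
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2 * sigma ** 2))


def mmd_squared(xs, ys, sigma=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""

    def mean_kernel(us, vs):
        total = sum(rbf_kernel(u, v, sigma) for u in us for v in vs)
        return total / (len(us) * len(vs))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)


def wasserstein_1d(xs, ys):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    # In 1-D, the optimal transport plan pairs the sorted samples in order.
    return sum(abs(x - y) for x, y in zip(sorted(xs), sorted(ys))) / len(xs)


real = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1]]   # toy "real" feature vectors
fake = [[3.0, 3.0], [3.1, 3.0], [3.0, 3.1]]   # toy "generated" feature vectors
print(mmd_squared(real, real))  # identical samples: 0.0
print(mmd_squared(real, fake))  # well-separated samples: large value
print(wasserstein_1d([0.0, 1.0, 2.0], [1.0, 2.0, 3.0]))  # shifted by 1: 1.0
```

The value of the MMD depends heavily on the kernel bandwidth `sigma`. This is one concrete example of the trade-offs these metrics involve.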

Given the limitations of automated metrics, human evaluation remains a crucial component of image generation assessment. Human evaluators can provide valuable judgments on image quality, diversity, and realism. For example, humans are often better than AI at spotting when a generated person looks wrong. However, until now, human evaluation has introduced problems of scale, elasticity, and bias.

Self-supervised learning techniques have emerged as a promising approach to address the challenges of image generation evaluation by using the generated data itself to create proxy labels for evaluation.

GANs can be used not only for image generation but also for evaluation. Techniques like the Generative Adversarial Metric (GAM) leverage the adversarial nature of GANs to create discriminative models that can assess the quality of generated images. This approach offers a more principled way to evaluate GANs and other generative models.

Another promising avenue in image generation evaluation involves the use of transfer learning. Pre-trained models, such as deep neural networks trained on large-scale datasets, can be fine-tuned for evaluation tasks. This allows researchers to leverage the representational power of these models to develop robust evaluation metrics.

Tasq.ai’s unique system makes it possible to incorporate output evaluation with high accuracy, super-short SLAs, and feedback from any country and at any scale.

The unique, scalable solution allows for objective human judgments to be aggregated into a single, accurate confidence score that enables the improvement of generated data prior to production.
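The aggregation method itself isn’t described here. As a generic, hypothetical illustration (not Tasq.ai’s actual algorithm), one common way to turn a set of pass/fail human judgments into a single conservative confidence score is to take the lower bound of the Wilson score interval for the approval rate. The function name and the default `z=1.96` (roughly a 95% interval) are our assumptions.

```python
import math


def wilson_lower_bound(approvals, total, z=1.96):
    """Lower bound of the Wilson score interval for an approval proportion.

    Hypothetical aggregation: each rater marks a generated image as
    acceptable (approval) or not; the bound is a conservative estimate
    of the true approval rate given how many judgments were collected.
    """
    if total == 0:
        return 0.0
    p = approvals / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    # Clamp tiny negative values caused by floating-point rounding.
    return max(0.0, (centre - margin) / denom)


print(wilson_lower_bound(8, 10))    # 80% approval from 10 raters
print(wilson_lower_bound(80, 100))  # 80% approval from 100 raters
```

With the same 80% approval rate, 100 raters yield a higher lower bound (about 0.71) than 10 raters (about 0.49), because more judgments leave less uncertainty about the true rate.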

Millions of Tasqers from over 150 countries carry out simultaneous Human-in-the-Loop workflows to validate and correct the realism of generated data, with distributed geographic input helping to minimize bias.

The components that make this possible include:

A great example of this is Tasq.ai’s collaboration with BRIA, a holistic visual generative AI solution that’s partnered with the likes of Getty Images, Nvidia, McCann and others.

BRIA have developed a quality system based on the Tasq.ai platform to provide them with:

You can see more in-depth details around the challenges faced and the solutions created in the Webinar hosted by Tasq.ai and BRIA, “Measuring quality of generative AI using multi-national crowds”.

While the field of generative AI is quickly gaining mainstream traction, there are still significant challenges from a technological perspective – perhaps chief among these being how output is evaluated.

We’ve explored various ways these challenges are being addressed, noting in particular how Tasq.ai’s solution is the only way to effectively bridge the critical human and non-human evaluation methods.

To learn more, get in touch with the Tasq.ai team today.

